Something I see a lot when talking about performance is "cache locality" (pre-fetching data you're likely to see into one of the caches). I am interested to know the extent to which instruction-level parallelism improves performance relative to writing cache-friendly programs.

This is especially on my mind, because one thing that's covered in EE282 is the fact that the time it takes to access some memory can be up to several orders of magnitude slower than "executing an instruction", so there comes a point where being able to execute more instructions doesn't meaningfully improve the speed / efficiency of a program. I hope this class can teach me how to analyze the speedups from different optimization techniques and where I can get the best "bang for my buck".

@riglesias I think you make a good point in that memory accesses can be far more expensive than arithmetic computations. In that case, I believe we will have to rely on concurrency methods to hide latency when you have a lot of memory access dominating the computation. Designing programs with a good cache hit rate can also hence really help with speedup. I believe there are also methods that prefetch certain data in order to help improve runtime down the line.

To what degree is it possible for compilers to automatically parallelize code written in high-level programming languages and without consideration for parallelization? Will effective parallelization always require the end-user to be thinking about parallelization when writing code?

Adding onto @platypus's question, what's the role of the compiler in automatically parallelizing code? I would think that the compiler plays a huge role since it's able to analyze the entire code end to end

Answering to @platypus question. I am not sure about the capabilities of compilers to parallelize code on high level programming languages (lets say Python). From my understanding about compilers and CPU optimization, each compiler performs optimizations for a specific CPU architecture, being able to optimally use registers. Think about what a compiler does. It produces machine code that is (after linking) translated in an executable that will be run by the CPU. So if this machine code is optimized, I guess that the CPU utilization and parallelization is optimized.

It has to be noted that memory management / allocation performance for low level languages such as C and C++ is very much up to the programmer since it is manual (not the case for higher level languages).

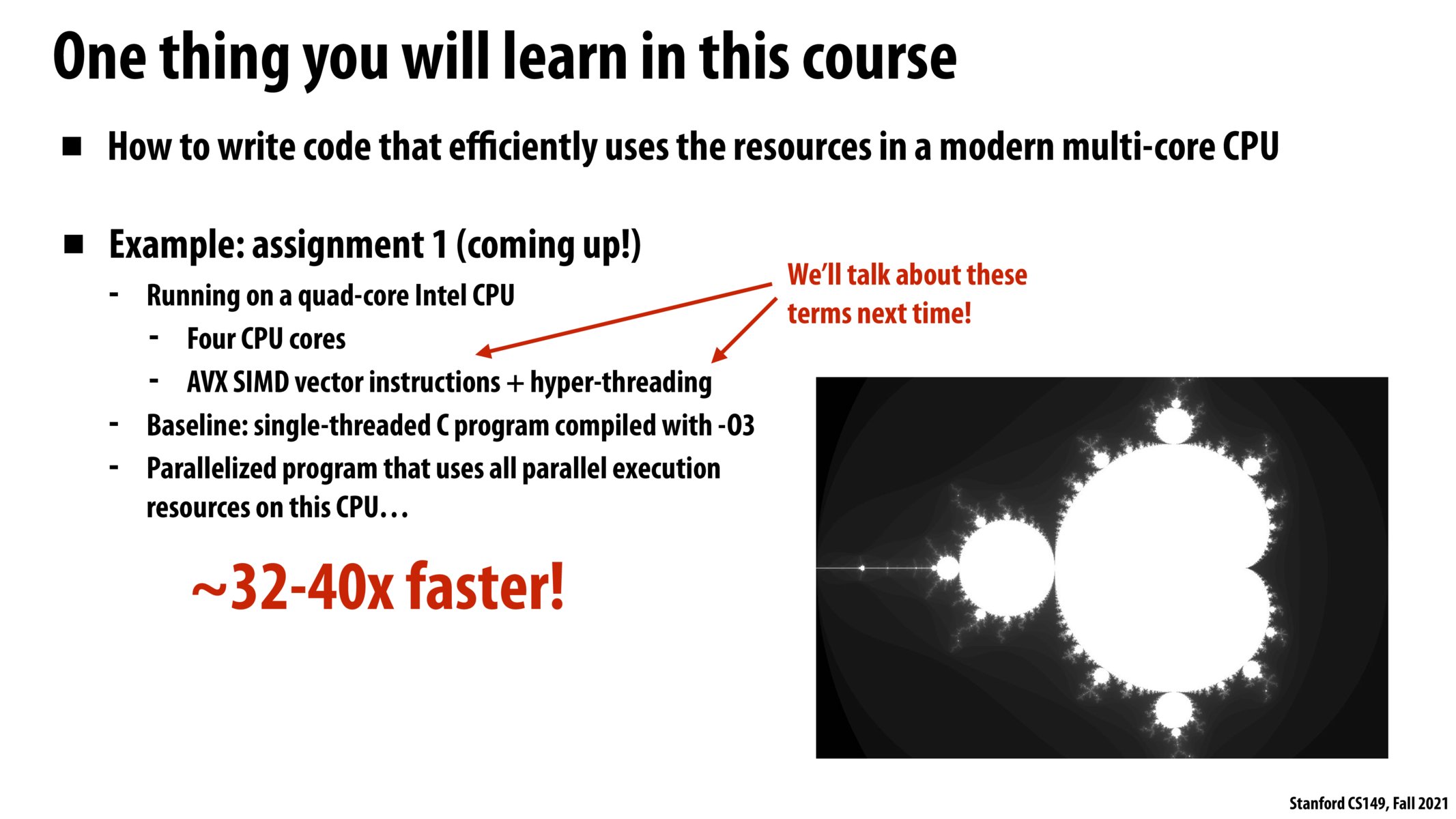

I believe it was on this slide that Kayvon stated during lecture that, even though we think of C code as being the way to go for the best performance we can get, it still pales in comparison to what we can get out of parallel code. I imagine this will make sense soon in the course, but it's my impression that C code is so close to Assembly that I'm struggling to imagine how we could tell the CPU to do something that we couldn't tell it to do in C code. Does C not support certain types of cues/instructions that other languages have? And are those parallel instructions or structures of instructions evident from the Assembly code? At what level(s) can we tell that something couldn't have been written in C?

@spc, I'm not sure this is what Prof. Fatahalian meant, but it could be that it's just really difficult and time-consuming to write well-optimized parallel code in C, while other languages, which have been designed with parallelism (e.g. CUDA) or concurrency (e.g. Erlang) in mind might make it easier for developers to write fast, complex parallel code.

Please log in to leave a comment.

Asked during lecture, but it sounds like we should not expect a 1:1 in terms of new cores to speedup -- 1 --> 4 cores can get us up to 40x faster. One missing ingredient is SIMD which seems like we can execute the same instruction on multiple pieces of data at once.