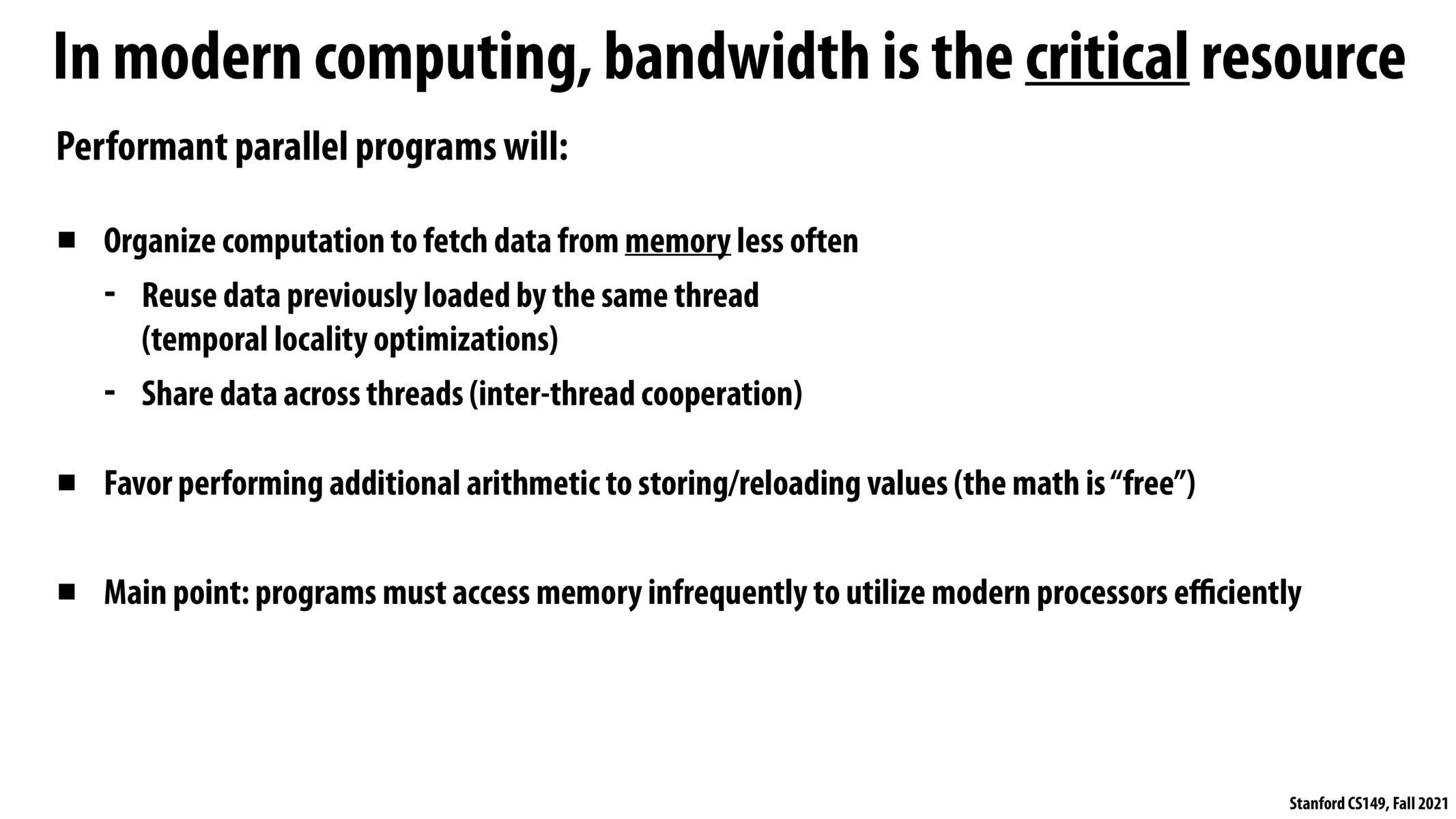

@nassosterz I suppose one issue is that storing a temporary variable could itself become a memory access. The memory bottleneck suggests that recomputing certain values may be faster than storing them, but it depends on how the compiler decides to store the variables (in a register, or spilled to memory).

I think this brings up an interesting point about the tradeoff between accessing memory and performing computation. As programmers, we often prioritize minimizing the program's computation, when we could actually benefit from performing more computation to avoid excessive memory access. It would be interesting to see what tools are available to benchmark or monitor a program's performance with respect to memory bandwidth and computation.
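To make the tradeoff concrete, here is a rough back-of-the-envelope sketch in C++ of a roofline-style estimate. The function names and the machine numbers in the note below are made up for illustration; they are not taken from the slide or from any real chip.

```cpp
#include <algorithm>
#include <cassert>

// Arithmetic intensity: floating-point operations performed per byte
// moved to or from memory.
double arithmetic_intensity(double flops, double bytes_moved) {
    assert(bytes_moved > 0.0);
    return flops / bytes_moved;
}

// Roofline-style upper bound on throughput in GFLOP/s: the kernel is
// limited either by peak compute or by (bandwidth * intensity),
// whichever is smaller. Peak values are assumptions supplied by the caller.
double attainable_gflops(double peak_gflops, double peak_gb_per_s,
                         double intensity) {
    return std::min(peak_gflops, peak_gb_per_s * intensity);
}

// True when memory bandwidth, not compute, sets the bound.
bool is_bandwidth_bound(double peak_gflops, double peak_gb_per_s,
                        double intensity) {
    return peak_gb_per_s * intensity < peak_gflops;
}
```

For example, an element-wise multiply of 4-byte floats does one multiply per 12 bytes moved (two loads and one store), so `arithmetic_intensity(1.0, 12.0)` is 1/12. On a hypothetical machine with 1000 GFLOP/s of peak compute and 120 GB/s of bandwidth, `attainable_gflops` gives roughly 10 GFLOP/s, which means most of the arithmetic units would sit idle waiting on memory.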

I agree with what the others said. In other classes and earlier in this class, I've learned about the impact memory access has on the runtime of a program. However, I think this lecture conveyed the point more forcefully. The specific circumstances for GPUs make a lot more sense too, with this understanding of the math and the memory requests.

I could relate what's conveyed on this slide (and a couple of slides earlier) to what I read about the M1 chip being amazingly fast. CPUs and GPUs have different memory requirements: CPUs seem to work well with low memory latency even if that means trading away some memory bandwidth, whereas GPUs need high memory bandwidth even if the memory latency is high. That is why CPUs and GPUs were originally designed to access separate regions of memory, which required any data shared between the CPU and GPU to be copied, making the process slower. The M1 seems to have addressed this problem, which contributes to its fast performance, with a Unified Memory Architecture approach: it uses memory that is both low latency and high throughput, removing the need for two separate memories and for the copies that had to be made to share data between the CPU and GPU.

Regarding the last point, in my opinion it is something to consider when writing any sort of code. Even though most of the time we may not think about memory accesses and may keep going back to memory for the same value, simple optimizations such as storing values in temporary variables can drastically increase the performance of a piece of code.
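A minimal C++ sketch of the kind of optimization I mean. The function names are hypothetical, and whether the local actually ends up in a register (and how much faster it is) depends on the compiler and optimization level.

```cpp
#include <cassert>
#include <cstddef>

// Reads *scale inside the loop. Since `out` and `scale` are both float
// pointers and could alias, the compiler may have to reload *scale from
// memory on every iteration in case a store to out[i] changed it.
void scale_reload(float* out, const float* in, const float* scale,
                  std::size_t n) {
    assert(scale != nullptr);
    for (std::size_t i = 0; i < n; ++i) {
        out[i] = in[i] * *scale;
    }
}

// Copies *scale into a local temporary once. The local cannot be
// modified through `out`, so the compiler is free to keep it in a register.
void scale_hoisted(float* out, const float* in, const float* scale,
                   std::size_t n) {
    assert(scale != nullptr);
    const float s = *scale;
    for (std::size_t i = 0; i < n; ++i) {
        out[i] = in[i] * s;
    }
}
```

Both versions produce the same output as long as `scale` does not point into `out`; the second just makes it explicit that the value is loaded once.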

I find that these recap slides help me wrap my head around useful concepts that I later find myself considering when writing code.