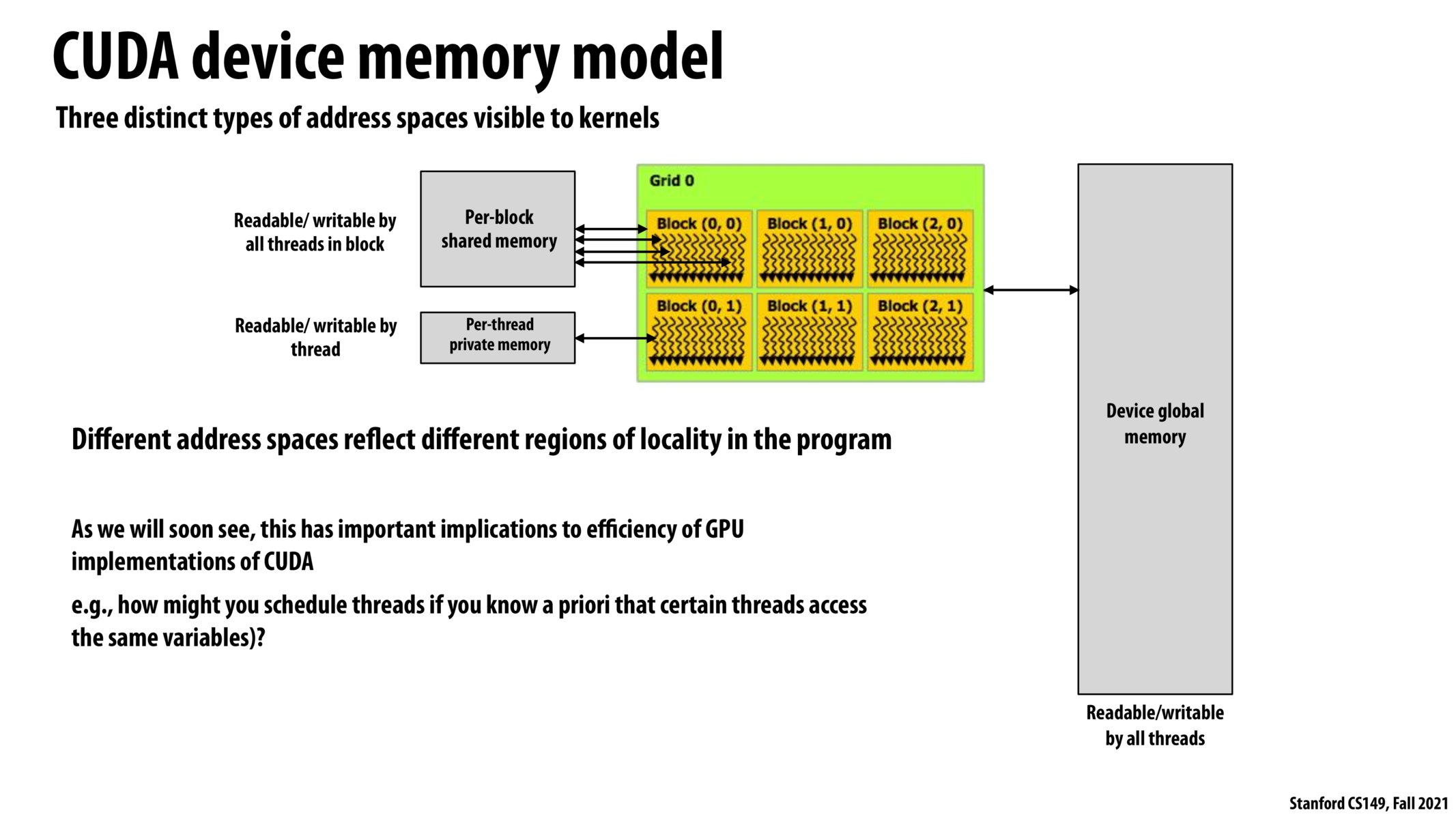

@shivalgo wouldn't the temporal locality for a block of threads be relevant to the device global memory, since the blocks of threads all have access to that part of the memory?

Also, I am wondering whether the abstraction of a block of threads is useful only for data locality, or whether the creators of CUDA had other reasons for choosing this approach.

@nassosterz, I think temporal locality for a block of threads is also relevant to the per-block shared memory: in programs with high temporal locality we would see fewer cache misses (or fewer accesses to global memory). Since global memory is accessible by all threads, it is likely farther away from the thread block, and concurrent writes to it need synchronization mechanisms to avoid corrupting the data.
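To make the shared-memory point concrete, here is a minimal sketch of a 1D three-element window sum. It is only an illustration under assumptions: the names `window_sum`, `run_window_sum`, `tile`, and `THREADS_PER_BLOCK` are hypothetical, the input is assumed to hold at least three elements, and error checking on the CUDA calls is left out for brevity. Each block copies its slice of the input into per-block shared memory once, and then up to three threads of that block reuse each element from there instead of going back to global memory.

```cuda
#include <cuda_runtime.h>
#include <vector>

// Hypothetical block size; the kernel assumes it is launched with exactly this many threads.
constexpr int THREADS_PER_BLOCK = 128;

// out[i] = in[i] + in[i+1] + in[i+2], so `in` must hold n + 2 elements.
__global__ void window_sum(const float* in, float* out, int n) {
    // Per-block shared memory: the block's slice of the input plus 2 halo elements.
    __shared__ float tile[THREADS_PER_BLOCK + 2];

    int i = blockIdx.x * blockDim.x + threadIdx.x;

    // Cooperative load: each element the block needs is read from global memory once.
    if (i < n + 2) tile[threadIdx.x] = in[i];
    if (threadIdx.x < 2) {
        int j = i + THREADS_PER_BLOCK;  // the two halo elements past the block's slice
        if (j < n + 2) tile[THREADS_PER_BLOCK + threadIdx.x] = in[j];
    }

    // Every thread in the block waits here until the tile is fully loaded.
    __syncthreads();

    // Each tile element is now reused by up to three threads of this block.
    if (i < n) {
        out[i] = tile[threadIdx.x] + tile[threadIdx.x + 1] + tile[threadIdx.x + 2];
    }
}

// Host-side helper (assumes in.size() >= 3; CUDA error checks omitted).
std::vector<float> run_window_sum(const std::vector<float>& in) {
    int n = static_cast<int>(in.size()) - 2;
    std::vector<float> out(n);

    float* d_in = nullptr;
    float* d_out = nullptr;
    cudaMalloc(&d_in, in.size() * sizeof(float));
    cudaMalloc(&d_out, n * sizeof(float));
    cudaMemcpy(d_in, in.data(), in.size() * sizeof(float), cudaMemcpyHostToDevice);

    int blocks = (n + THREADS_PER_BLOCK - 1) / THREADS_PER_BLOCK;
    window_sum<<<blocks, THREADS_PER_BLOCK>>>(d_in, d_out, n);

    // This copy runs after the kernel on the default stream, so the results are ready.
    cudaMemcpy(out.data(), d_out, n * sizeof(float), cudaMemcpyDeviceToHost);
    cudaFree(d_in);
    cudaFree(d_out);
    return out;
}
```

Calling `run_window_sum` on a vector of 300 ones should give 298 outputs, each equal to 3. The `__syncthreads()` barrier only coordinates threads within one block, which is one reason the reuse is kept inside the block rather than spread across blocks.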


If we know a priori that threads share the same variable, then scheduling the entire block of threads on the same processor would exploit spatial data locality. Does it have temporal locality advantages too? I am thinking not, but I am still pondering how to think about temporal locality for a block of threads.