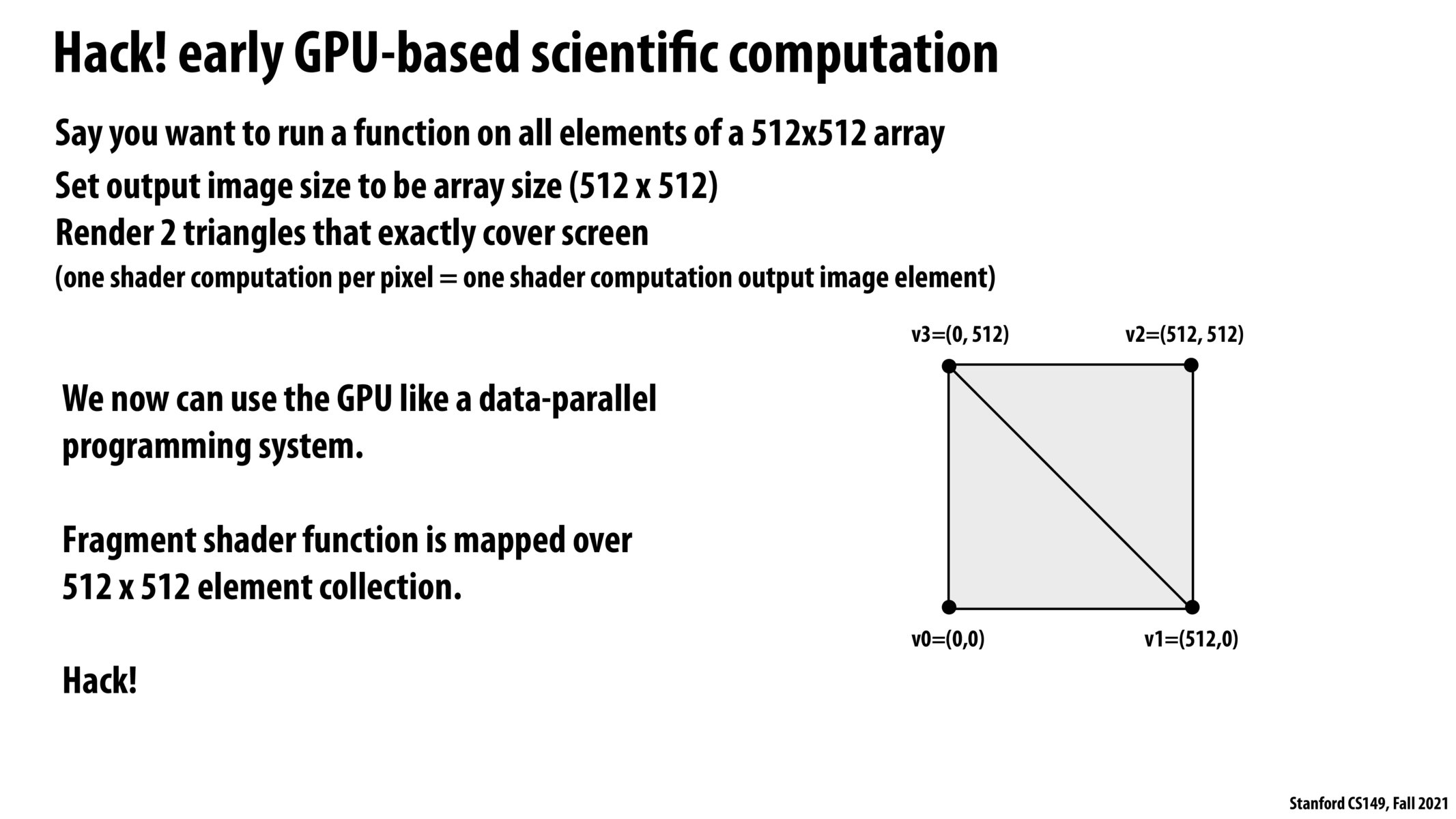

What I don't get is how you get the computed data back out of the GPU in these early OpenGL hacks. Is there an OpenGL function you can use to read back the frame buffer (the area of GPU memory that the GPU would write "drawn images" to) from the GPU memory to the CPU's/system's main memory?

@gmukobi -- yes, you can read the contents of the framebuffer back to the host CPU, for example with `glReadPixels`. That's how you would get the results of your computation.

What's interesting is how you can develop all this awesome computing power and have the intended use case be just graphics. Maybe it's hindsight bias, but it seems like you would want a more versatile use case so that there wouldn't be a need for hacks such as this.

"Just graphics"? Graphics are cool! And in hindsight, the Graphics Processing Unit was invented to accelerate graphics-related tasks well before more abstract uses like deep neural networks or large-vector scientific computing were popular enough to want GPU acceleration, and before Moore's law for CPUs started slowing down.

The "hack" mentioned here has an interesting relation to the course's emphasis on the distinction between abstractions and implementations, and I thought it might be worth making this explicit. Sometimes breaking assumptions in the abstraction to interface directly with the implementation ("hacks"?) can lead to pretty cool stuff like the "GPGPU" ideas, exposing the need for better abstractions. Interestingly, it would seem that having such a leaky abstraction is kind of necessary, or at least helpful, for such developments.

This is kind of interesting to think about, but why exactly were the original GPUs in this era forced to do only one computation per pixel on the screen? Why couldn't the computation be abstracted away from the pixels covered by the output triangles? Is this due to some hardware design decision that couldn't be changed in software, or is there some other reason this was the case?

Why does it have to be two triangles? Can we just think of it as a square of 512*512?

@pis I think it's triangles because GPU hardware is built to accelerate rasterization of triangles, for which there are closed-form equations to determine whether a pixel lies inside the triangle's 3 vertices and how close it is to each of them (for interpolation of vertex data). Those equations get unnecessarily more complex (and thus slower) when you try to support arbitrary quads, especially when a quad (like the whole screen) is really just 2 tris.
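To make the "closed-form equations" point concrete, here is a rough CPU-side sketch in Python of the edge-function test and barycentric interpolation, applied to a square split into two triangles. This is only an illustration, not how any particular GPU implements rasterization: the names `edge`, `barycentric`, and `shade_quad` are made up for this example, and where real rasterizers use a fill rule to decide which triangle owns a pixel on the shared diagonal, this sketch simply takes the first triangle that contains the pixel center.

```python
def edge(a, b, p):
    """Twice the signed area of triangle (a, b, p).

    The sign tells which side of the directed edge a->b the point p lies on.
    """
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def barycentric(tri, p):
    """Return barycentric weights (w0, w1, w2) of p, or None if p is outside tri."""
    v0, v1, v2 = tri
    area = edge(v0, v1, v2)
    if area == 0:
        return None  # degenerate triangle, nothing to cover
    # Dividing by the signed area makes the test independent of winding order.
    w0 = edge(v1, v2, p) / area
    w1 = edge(v2, v0, p) / area
    w2 = edge(v0, v1, p) / area
    if w0 < 0 or w1 < 0 or w2 < 0:
        return None
    return (w0, w1, w2)


def shade_quad(n, corner_values):
    """Cover an n x n pixel square with two triangles; interpolate one value per pixel.

    corner_values are given for the corners (0,0), (n,0), (n,n), (0,n), in that order.
    """
    p00, p10, p11, p01 = (0, 0), (n, 0), (n, n), (0, n)
    c00, c10, c11, c01 = corner_values
    tris = [
        ((p00, p10, p11), (c00, c10, c11)),
        ((p00, p11, p01), (c00, c11, c01)),
    ]
    out = [[None] * n for _ in range(n)]
    for y in range(n):
        for x in range(n):
            center = (x + 0.5, y + 0.5)  # sample at the pixel center
            for tri, vals in tris:
                w = barycentric(tri, center)
                if w is not None:
                    # Interpolated vertex data: weighted sum of the corner values.
                    out[y][x] = sum(wi * vi for wi, vi in zip(w, vals))
                    break  # first hit wins (stand-in for a real fill rule)
    return out


if __name__ == "__main__":
    # Corner values equal to each corner's x coordinate, on a 4x4 "screen".
    for row in shade_quad(4, (0, 4, 4, 0)):
        print(row)
```

Each pixel of the square ends up with exactly one interpolated value, which is the sense in which the two triangles behave like "a square of 512*512": the inner loop body plays the role of the per-pixel computation the hardware runs once for every covered pixel.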


This hack is awesome.