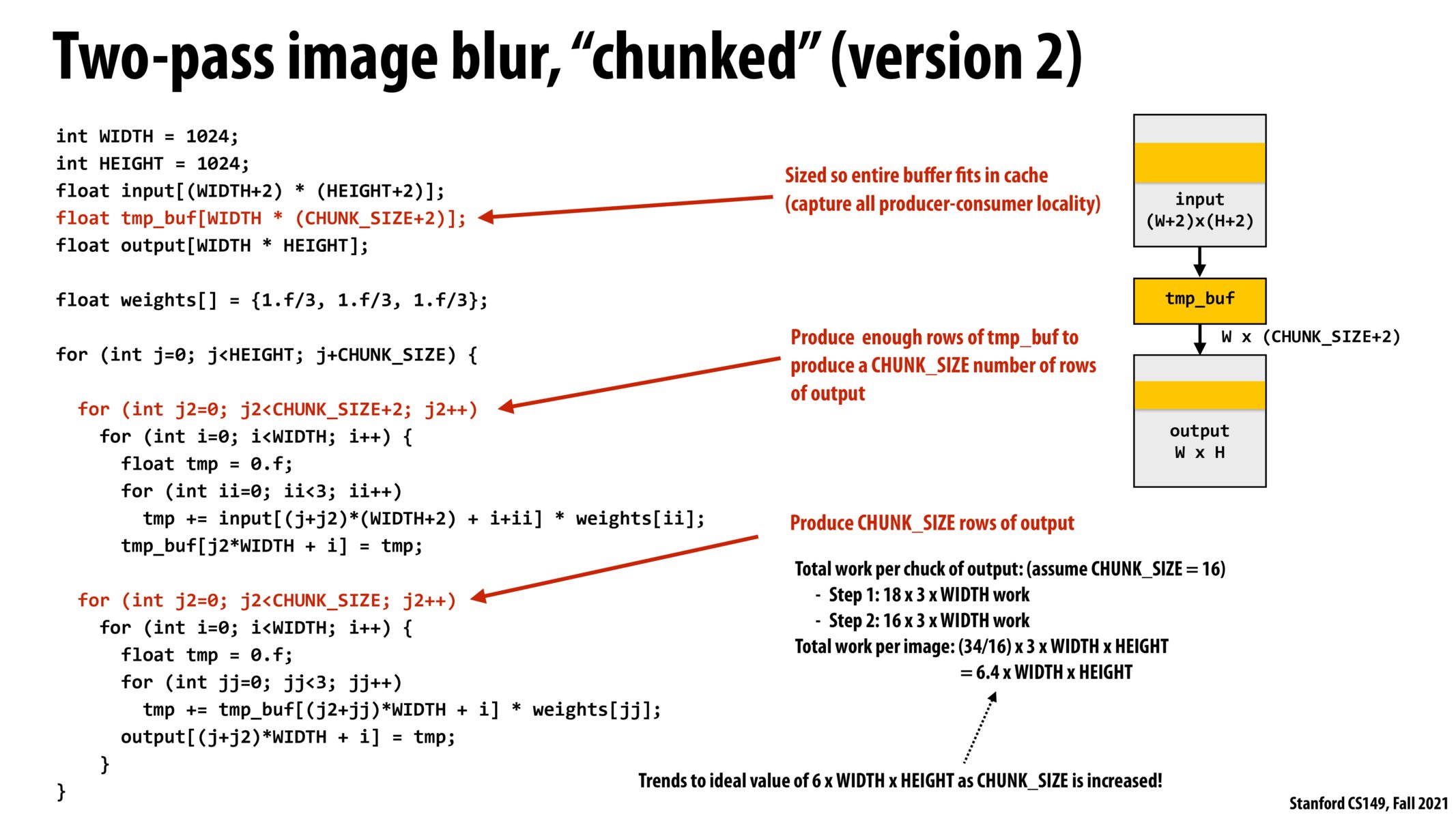

Is the reason we need CHUNCK_SIZE+2 because to compute the first and last rows, we need the one before and the one after?

@beste yes the input needs to be zero padded if you want your output to have the same shape as the input. This is an example of a convolution operation - if you're familiar with convolutions in TensorFlow or Pytorch - there is a padding option to preserve the input size.

Here we are convolving an image of size 1024 with a filter of size 3 so the output shape will be 1024 - 3 + 1 = 1022. So to preserve the shape you need to pad your input to be of size 1026 or CHUNK_SIZE+2.

O(N^2 + N) work when chunk size is small O(2N) work when it's sufficiently large

By reducing the size of image we go over in each iteration, we are making the tmp_buf smaller to fit into cache. Thus, chunked version of the code not only reduces the memory allocation of tmp_buf but also reduces the latency of loads/stores by accommodating for more cache hits. The chunk size can be as small as N in the filter size NxN. But having a chunk size of N makes the amount of work worse. (N^2 + N) * width * height

Please log in to leave a comment.

The code here benefits from the fact that we can increase the CHUNK_SIZE to amortize the cost of computing (CHUNK_SIZE+2) rows of tmp_buf. In the case where CHUNK_SIZE=16, we only need to consume 18 rows of input to produce 16 rows of output image. In contrast, the previous slide shows the same code but with CHUNK_SIZE=1. In that case, we need to consume 3 rows of input to produce 1 row of output image.

Ideally we'd want the CHUNK_SIZE to be large for best performance. However, we must also make sure that 1) CHUNK_SIZE of tmp_buf can fit in cache so we get all the cache hits, and 2) there are enough chunks so that the threads/cores on the machine all have work to do in parallel.