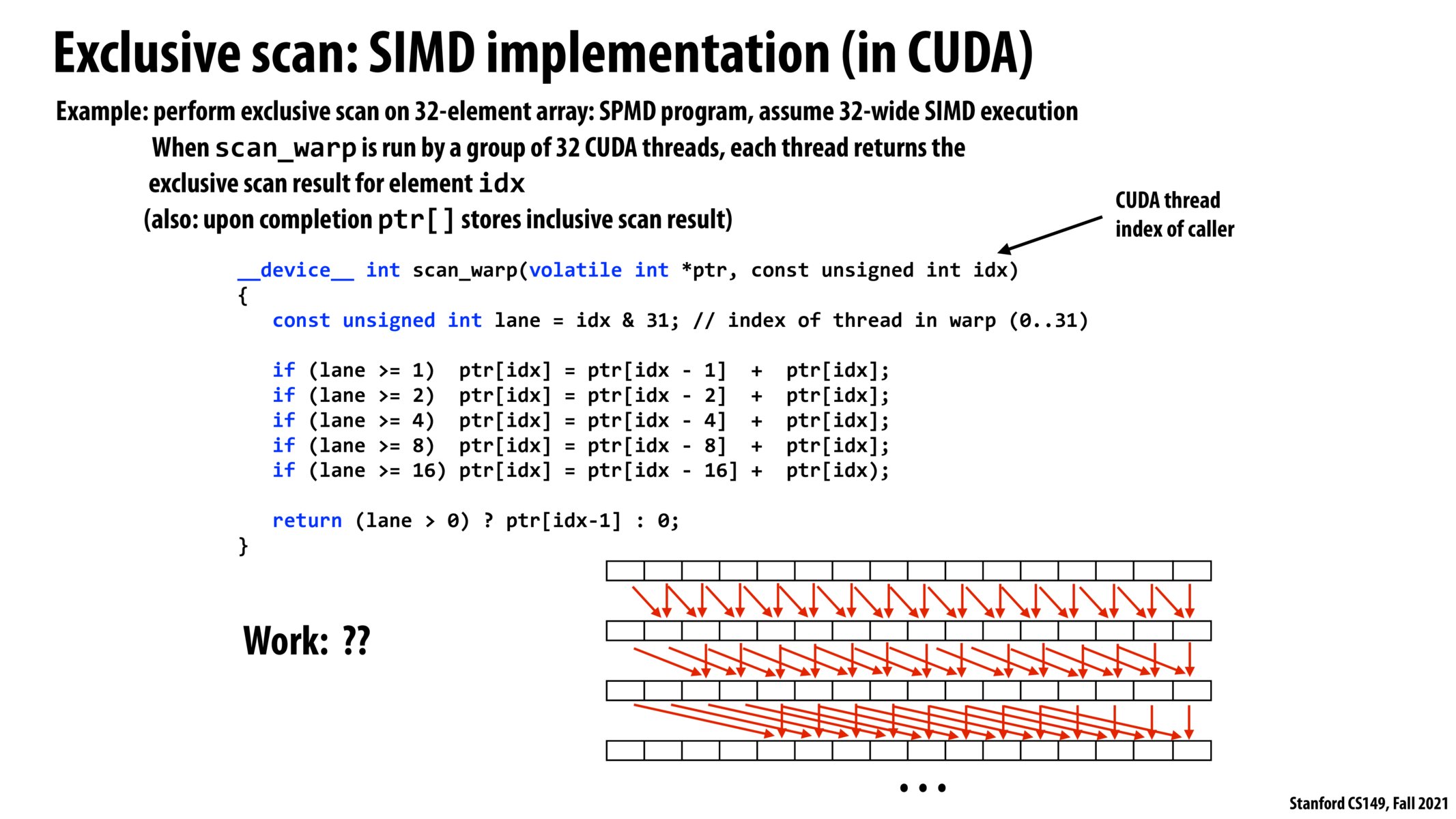

I wonder why we do not need synchronization of threads between the computation for each layer. It seems to me that we cannot compute values for next layer till values at current layer for all indexes (threads) have been computed? I probably missed something...

From Office Hours, I was told that there actually is some very light form of synchronization going on behind the scenes. CUDA can recognize when multiple kernels (or instances of the same kernel) are modifying the same memory address, and essentially "block" for a bit until the first kernel finishes modifying its memory.

I think this has to do with CUDA "streams", but I haven't been able to conclusively show that when you have an array that's touched by multiple invocation of kernels, there's an implicit synchronicity in writing to certain parts of the array (but I'm not sure)

DISCLAIMER: I'm not 100% sure whether this actually applies or not, or if this is pseudo-CUDA that's trying to illustrate the concept without necessarily being completely technically correct, but this is my hypothesis/comment:

From what I've read, this seems like it wouldn't work on higher compute capabilities (7.x+) on the newer chips (Volta & later) because of Independent Thread Scheduling.

Basically, while in earlier compute versions & architectures, it seems like the GPU would execute the warps in lockstep and disallow continued divergence (i.e. converge the warp lanes at the first opportunity), as well as providing some degree of warp-based atomicity for reads/writes from/to memory.

However, for newer architectures, when generating code for newer target compute capabilities, CUDA will actually allow for continued divergence (once a thread diverges, it won't necessarily converge immediately, even if an opportunity is presented), so I'm pretty sure this would require more __syncwarp()s, because theoretically (as far as I can tell), for example, thread 2 could just skip through most of the ifs and return with a half-baked value of ptr[1], (or could potentially diverge in other places? not entirely clear on that). Also, it would probably have to change those fetch-add-stores into something that would allow embedding __syncwarp()s to ensure that the memory accesses are properly ordered.

I'd appreciate it if anyone could toss in an opinion on this!

Please log in to leave a comment.

I was getting a bit confused on the SIMD we went over in CPUs and the new material here - this link and its references helped me contextualize these next few slides a bit better: https://stackoverflow.com/questions/27333815/cpu-simd-vs-gpu-simd